At the beginning of this month, Adrian Holovaty, the founder of the music-teaching platform Soundslice, finally solved a mystery that had been puzzling him for several weeks. The enigma revolved around strange images of ChatGPT sessions that were continuously being uploaded to his website.

Upon solving the mystery, Holovaty discovered that ChatGPT had inadvertently become a significant promoter of his company, albeit while providing misinformation about the capabilities of his application.

Holovaty is best known as one of the co-creators of the open-source Django project, a widely used Python web development framework, though he stepped down from managing the project in 2014. In 2012, he launched Soundslice, which remains “proudly bootstrapped,” as he told TechCrunch. Currently, he is focused on music, both as an artist and as a founder.

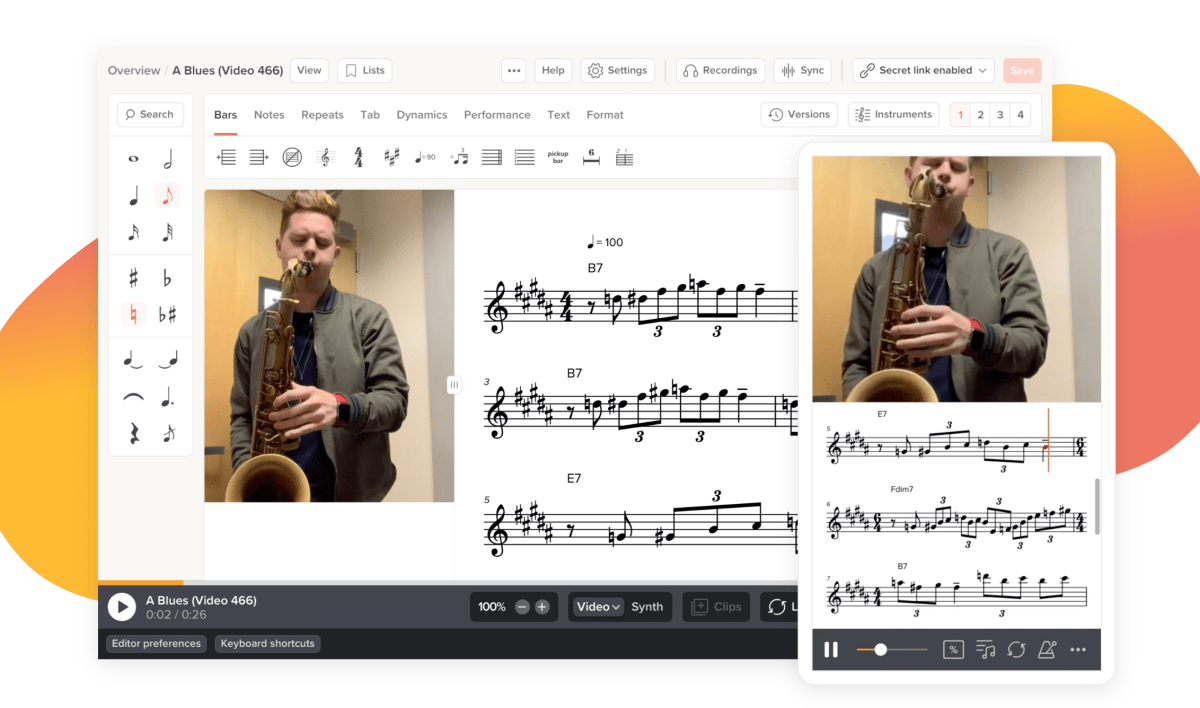

Soundslice is an app for teaching music, used by both students and teachers. It is known for its video player, which is synchronized to music notation that shows users how the notes should be played.

The platform also features a “sheet music scanner” that allows users to upload images of paper sheet music and, using AI, automatically converts them into interactive sheets complete with notations.

Holovaty closely monitors the error logs for this feature to identify areas where improvements can be made.

It was through these error logs that he first encountered the uploaded ChatGPT sessions.

These sessions were generating a large number of error logs because, instead of sheet music, they were images of text and ASCII tablature, a basic text-based system for guitar notation that uses only the characters on a standard keyboard.
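For readers unfamiliar with the format, the short Python sketch below shows what a typical ASCII tab looks like and how its text could be read as a sequence of notes. It is an illustration only, not Soundslice's importer: the sample `TAB` and the `parse_ascii_tab` helper are hypothetical, and the sketch assumes the common six-line layout in which each row is one guitar string and each number is a fret.

```python
import re

# Hypothetical sample tab: one row per guitar string, highest-pitched string on top.
TAB = """\
e|-------0---|
B|-----1-----|
G|---0-------|
D|-2---------|
A|-----------|
E|-----------|
"""


def parse_ascii_tab(tab):
    """Return (column, string_name, fret) tuples, ordered left to right."""
    events = []
    for line in tab.splitlines():
        if "|" not in line:
            continue  # skip anything that is not a tab row
        name, body = line.split("|", 1)
        # Every run of digits is a fret number; its column gives the playing order.
        for match in re.finditer(r"\d+", body):
            events.append((match.start(), name.strip(), int(match.group())))
    return sorted(events)


for column, string_name, fret in parse_ascii_tab(TAB):
    print(f"column {column}: string {string_name}, fret {fret}")
```

The sketch works on plain text. A screenshot of a chat session would first have to be turned back into text, which is a separate problem from the sheet music recognition the scanner was built for.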

The volume of these uploaded images was not substantial enough to incur significant storage costs or affect the app’s bandwidth. However, Holovaty was perplexed by the situation, which he described in a blog post.

“Our scanning system was not designed to support this style of notation. I was baffled as to why we were receiving so many ASCII tab ChatGPT screenshots — until I experimented with ChatGPT myself.”

Through his experimentation, Holovaty discovered that ChatGPT was informing users that they could hear the music by opening a Soundslice account and uploading the image of the chat session. However, this was not possible, as uploading those images would not translate the ASCII tab into audible notes.

This realization introduced a new problem. “The primary concern was reputational: New Soundslice users were creating accounts with false expectations, having been confidently told that we could do something that we don’t actually do,” Holovaty explained to TechCrunch.

He and his team weighed their options: They could add disclaimers throughout the site stating that the platform cannot convert ChatGPT sessions into audible music, or they could develop the feature to support this offbeat musical notation system, despite never having considered it before.

Ultimately, Holovaty decided to build the feature.

“My feelings on this matter are mixed. I’m pleased to add a tool that assists people, but I feel like our hand was forced in an unusual way. Should we really be developing features in response to misinformation?” he wrote.

He also wondered whether this was the first documented case of a company having to build a feature because ChatGPT kept telling many people, incorrectly, that it already existed.

Programmers on Hacker News had an interesting perspective on the matter: Several of them noted that it’s no different from an overzealous human salesperson promising the world to prospects and then forcing developers to deliver new features.

“I think that’s a very apt and amusing comparison!” Holovaty agreed.
