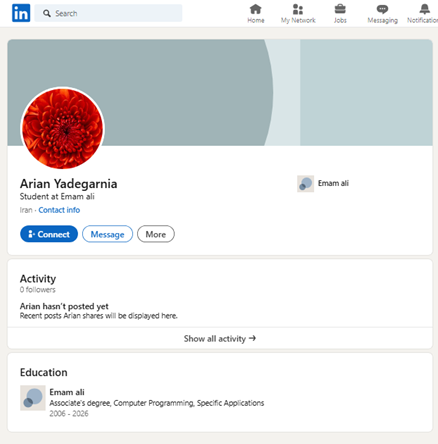

Microsoft has filed an amended complaint in a recent civil lawsuit, naming the primary developers of malicious tools designed to bypass the safeguards of generative AI services, including its Azure OpenAI Service. The company is taking this legal action against identified defendants to halt their activities, dismantle their illicit operation, and deter others from misusing AI technology. The individuals named in the complaint are Arian Yadegarnia, Alan Krysiak, Ricky Yuen, and Phát Phùng Tấn, who are allegedly part of a global cybercrime network known as Storm-2139.

Storm-2139 exploited exposed customer credentials to access accounts with certain generative AI services, altered the capabilities of these services, and resold access to other malicious actors. They provided detailed instructions on how to generate harmful and illicit content, including non-consensual intimate images of celebrities and other explicit content. This activity is prohibited under the terms of use for Microsoft’s generative AI services and required deliberate efforts to bypass the company’s safeguards. Microsoft has chosen not to name specific celebrities to protect their identities and has excluded synthetic imagery and prompts from its filings to prevent further harm.

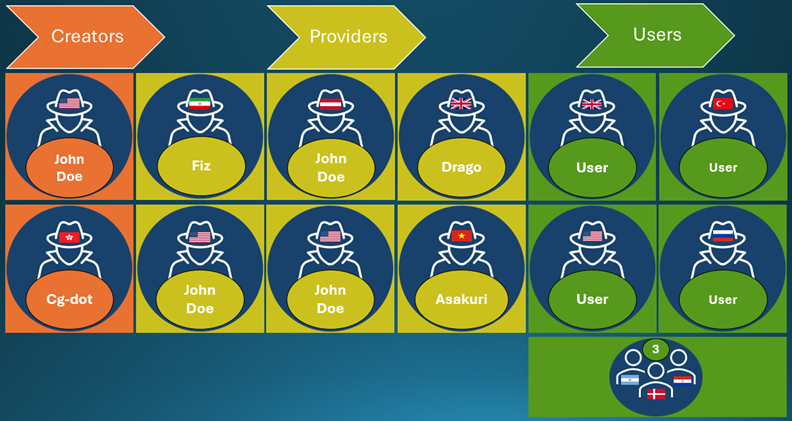

Storm-2139: A Global Network of Creators, Providers, and End Users

In December 2024, Microsoft’s Digital Crimes Unit filed a lawsuit in the Eastern District of Virginia against 10 unidentified individuals engaged in activities that violate U.S. law and Microsoft’s Acceptable Use Policy and Code of Conduct. The lawsuit enabled Microsoft to gather more information about the operations of the criminal enterprise. Storm-2139 is organized into three main categories: creators, providers, and end users. Creators developed the illicit tools that enabled the abuse of generative AI services, while providers modified these tools and supplied them to end users, often with varying tiers of service and payment. End users then used these tools to generate violating synthetic content, often centered on celebrities and sexual imagery.

A visual representation of Storm-2139 displays internet aliases uncovered as part of the investigation, as well as the countries where the associated personas are believed to be located. Microsoft has identified several personas, including the four named defendants, and is preparing criminal referrals to U.S. and foreign law enforcement representatives.

Cybercriminals React to Microsoft’s Website Seizure and Court Filing

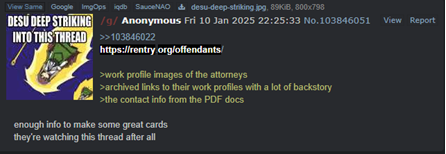

As part of the initial filing, the court issued a temporary restraining order and preliminary injunction, enabling Microsoft to seize a website instrumental to the criminal operation. The seizure of this website and the subsequent unsealing of the legal filings generated an immediate reaction from the actors, with some group members turning on one another and pointing fingers. Microsoft observed chatter about the lawsuit on the group’s monitored communication channels, where members speculated about the identities of the “John Does” and the potential consequences.

Certain members also “doxed” Microsoft’s counsel of record, posting their names and other personal information. This reaction underscores the impact of Microsoft’s legal actions and demonstrates how such measures can disrupt a cybercriminal network, both by seizing its infrastructure and by creating a powerful deterrent effect among its members.

Microsoft’s counsel received various emails, including several from suspected members of Storm-2139 attempting to cast blame on other members of the operation. The company is committed to protecting users by embedding robust AI guardrails and safeguarding its services from illegal and harmful content.

Continuing Our Commitment to Combating the Abuse of Generative AI

Microsoft takes the misuse of AI very seriously and recognizes the severe and lasting harm that abusive imagery causes victims. The company remains committed to protecting users and has published a comprehensive approach to combating abusive AI-generated content. Microsoft has also provided an update on its approach to intimate image abuse, detailing the steps it takes to protect its services from harm. By unmasking these individuals and shining a light on their malicious activities, Microsoft aims to set a precedent in the fight against the misuse of AI technology.

Disrupting malicious actors requires persistence and ongoing vigilance. Microsoft will continue to innovate and find new ways to keep users safe.
