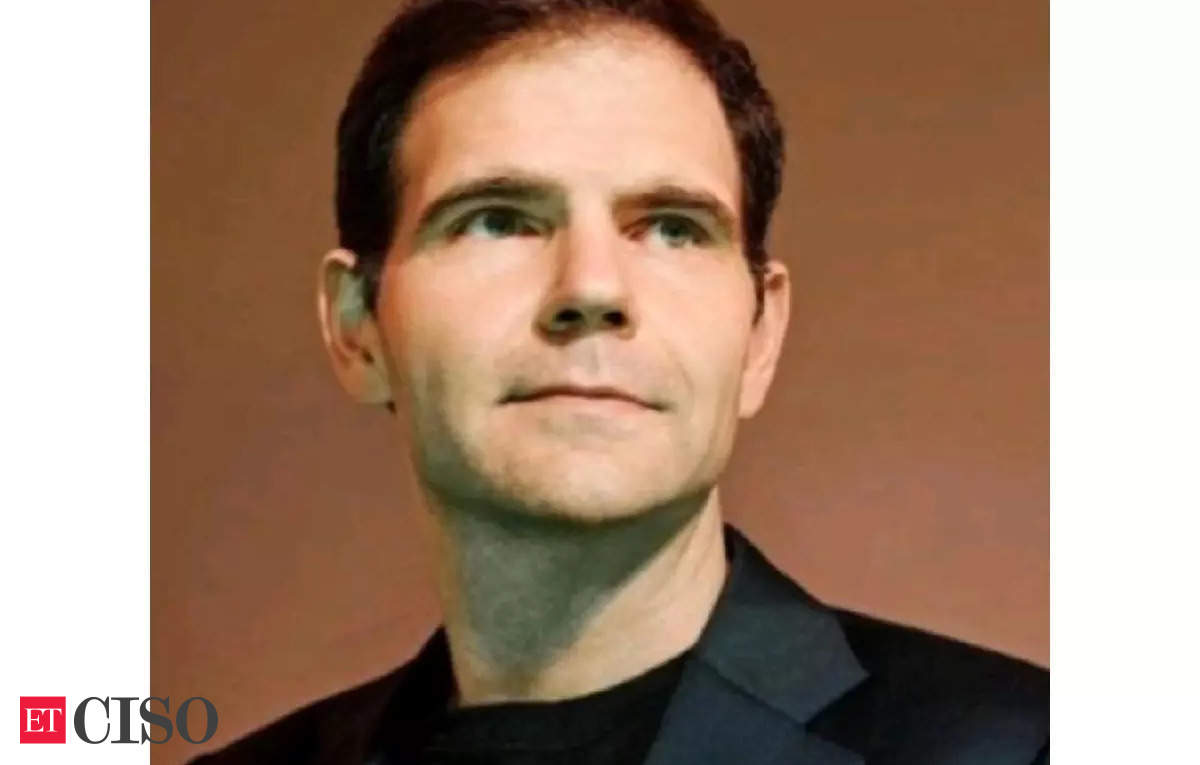

India should prioritize developing artificial intelligence applications and enhancing existing models rather than investing heavily in foundational models and AI chips, according to Jonathan Ross, cofounder and CEO of Groq, a Silicon Valley-based AI chip startup that challenges Nvidia. The company aims to reduce the cost of AI by scaling inferencing infrastructure. It has created a peering network between India and its data center in Saudi Arabia, where it made 19,000 AI inferencing chips available within eight days, Ross explained in an interview with ET. Ross, who co-developed the Tensor Processing Unit (TPU) at Google, expressed mild annoyance at the confusion between Groq and Elon Musk’s AI model ‘Grok’, and said the company has sent a ‘cease and desist’ notice to Musk, asking him to stop using the name.

Edited excerpts:

What are your thoughts on the Indian market?

With AI, India has a population advantage, as 1.5 billion people who are already computer-literate can utilize their entrepreneurial skills to create new things.

Since you don’t need to learn software, India is in a unique position. Many others have built training hardware, and now those models are being given away for free. If I were India, I would focus on collecting and fine-tuning these models for the 25 languages spoken in the country, and then use them for inference.

Should India develop its own foundational model?

That could be a backup plan, but other than Google, Meta, and Microsoft, everyone has abandoned building their own models. They have become a commodity, and training takes six months, while new models are released every month. It’s more practical to take existing models, fine-tune them, and build upon them.

From a hardware perspective, India has less than 2% of the world’s compute capacity…

Developing countries often have better infrastructure than the US. You’re not stuck with outdated technology, so you can build something new and potentially gain an advantage. I would focus on building AI infrastructure that is scalable and economical, and then replicate it worldwide.

India is also working on developing its own AI chip. What are your thoughts on this?

We’re willing to build chips wherever the fabs are. If India had fabs, we’d build them here. However, replicating everything that’s been done worldwide might not be the best use of resources. Why not focus on winning in a specific area instead of replicating something that’s already been done? Building fabs would require a $100-500 billion effort. Wouldn’t it be better to use that money to make India’s population productive and able to build their own businesses?

How do you see the relationship between the rise of agentic AI and the need for inferencing hardware, especially for an application-focused country like India?

We have over 150,000 developers in India using our platform, console.groq.com, for free. We give away more tokens per day than Google Cloud Platform. The US is supposed to be leading in AI, but India is catching up quickly because creating and using AI models are different things. Most developers on our platform have never trained a model in their life; they just use existing ones.

India should focus on using models that others have created, fine-tune them for the local market, and build upon them. The country is cost-sensitive, which is ideal for us, as we want to bring the cost of intelligence to zero. We’re working on peering arrangements to utilize the capacity of our 19,000 chips in Saudi Arabia and might deploy some in India.

How do you see competition from companies like Nvidia?

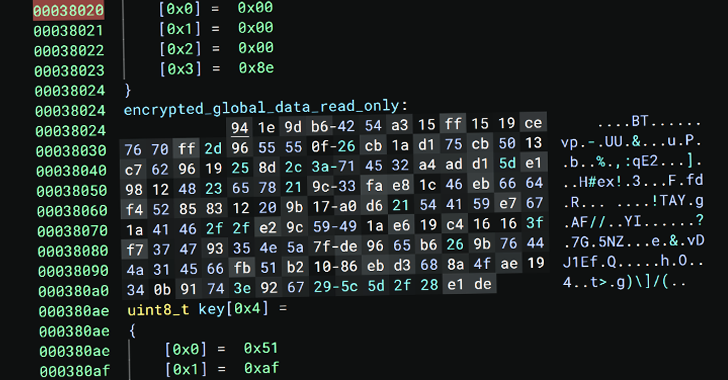

We don’t believe in building something competitive by copying others. Nvidia has built ideal chips for training, and we can’t do better than that. Instead, we want to make thinking (inferencing of models) faster. Our system is 20 times faster than a GPU.

How often do people confuse your company with Elon Musk’s Grok model?

The confusion is real, but we have the trademark and legal protection. We sent a ‘cease and desist’ notice to Elon Musk, asking him to stop using the name. He claimed he named it after ‘The Hitchhiker’s Guide to the Galaxy’, but he got the book wrong. We suggested he rename it Slartibartfast, after a character from the book he mentioned.

What does the DeepSeek moment mean for AI hardware?

We’ve been discussing the Jevons paradox, which states that the lower the cost of intelligence, the more people will buy. I think the amount spent on training will increase, but the cost of running an LLM hasn’t changed much in the last year. The cost of equivalent intelligence has actually decreased.

Do you think shutting out China will hinder AI innovation going forward?

India has banned TikTok due to data security concerns. We decided to host the DeepSeek model on our hardware to prevent sensitive data from going to China. Groq is clear about data privacy; we don’t retain people’s data and don’t have hard drives to store it.

Remember, they did innovation by distilling the OpenAI model and collecting techniques that had already been developed but not used by open-source models. That was going to happen soon, but they got there first. They benefited from what OpenAI did.

How did you navigate fund shortages and prepare for overcoming such situations in the future?

In Silicon Valley, the best companies start during difficult financial times. Google started in 1998, Facebook in 2003, and Amazon before the 2000 crash. I call it financial diabetes – when you get too much money, you don’t learn to spend it efficiently. We focused on high-volume, low-margin inference to stay healthy and financially disciplined.

Groq went through tough times, and we asked employees to trade salaries for equity. About 80% participated, and 50% went to the minimum salary. I took a $1 salary. Had we not done that, Groq wouldn’t exist. We had less attrition when we told employees that things were difficult. People want to be part of a team and feel trusted.
