Ant Group is using Chinese-made semiconductors to train artificial intelligence models, aiming to cut costs and reduce its dependence on restricted US technology, according to sources familiar with the matter.

Ant Group, an Alibaba affiliate, has used chips from domestic suppliers, including ones tied to Alibaba and Huawei Technologies, to train large language models using the Mixture of Experts (MoE) method. The results were reportedly comparable to those achieved with Nvidia’s H800 chips, according to the sources. Although Ant continues to use Nvidia chips for some of its AI development, the company is increasingly turning to alternatives from AMD and Chinese chip-makers for its latest models.

This development indicates Ant’s deeper involvement in the growing AI competition between Chinese and US tech firms, particularly as companies seek cost-effective ways to train models. The experimentation with domestic hardware reflects a broader effort among Chinese firms to circumvent export restrictions that block access to high-end chips like Nvidia’s H800, which, although not the most advanced, remains one of the more powerful GPUs available to Chinese organizations.

Ant has published a research paper detailing its work, stating that its models performed better than those developed by Meta in some tests. The company’s results, first reported by Bloomberg News, have not been independently verified. If the models perform as claimed, Ant’s efforts may represent a significant step forward in China’s attempt to lower the cost of running AI applications and reduce reliance on foreign hardware.

MoE models divide a task into smaller pieces, each handled by a separate component of the model, and the approach has been gaining attention among AI researchers and data scientists. The technique has been used by Google and the Hangzhou-based startup DeepSeek. The MoE concept is similar to having a team of specialists, each handling part of a task, which makes the process of producing models more efficient. Ant has declined to comment on its hardware sources.
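The "team of specialists" idea can be illustrated with a toy routing sketch. This is only a minimal illustration of top-k expert routing in general, not Ant's implementation: the function names (`top_k_route`, `moe_forward`), the four scaling "experts", and the gate weights are all hypothetical values chosen for the example.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(gate_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]

def moe_forward(x, gate_weights, experts, k=2):
    """Run only the k selected experts and blend their outputs."""
    # One gate score per expert: dot product of the input with that expert's gate vector.
    scores = [sum(xi * wi for xi, wi in zip(x, w)) for w in gate_weights]
    routes = top_k_route(scores, k)
    out = [0.0] * len(x)
    for idx, weight in routes:
        y = experts[idx](x)  # experts that were not selected are never called
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, routes

# Hypothetical toy setup: four "experts" that simply scale the input.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[3.0, 0.0], [1.0, 0.0], [2.0, 0.0], [0.0, 0.0]]

output, routes = moe_forward([1.0, 0.0], gate_weights, experts, k=2)
used = [idx for idx, _ in routes]
print("experts used:", used)  # experts 0 and 2 have the highest gate scores
```

Only two of the four experts run for this input, which is where the efficiency comes from: the total parameter count can grow while the compute spent per input stays roughly fixed.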

Training MoE models relies on high-performance GPUs, which can be too expensive for smaller companies to acquire or use. Ant’s research focused on reducing this cost barrier. The paper’s title is suffixed with a clear objective: Scaling Models “without premium GPUs.”

The direction taken by Ant and the use of MoE to reduce training costs contrast with Nvidia’s approach. CEO Jensen Huang has stated that demand for computing power will continue to grow, even with the introduction of more efficient models like DeepSeek’s R1. His view is that companies will seek more powerful chips to drive revenue growth, rather than aiming to cut costs with cheaper alternatives. Nvidia’s strategy remains focused on building GPUs with more cores, transistors, and memory.

According to the Ant Group paper, training a model on one trillion tokens – the basic units of data AI models use to learn – costs approximately 6.35 million yuan (roughly $880,000) using conventional high-performance hardware. The company’s optimized training method reduced this cost to around 5.1 million yuan by using lower-specification chips.
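For a sense of scale, the reported figures can be worked through in a few lines. The yuan amounts and the rough $880,000 conversion come from the article; the exchange rate below is only the one implied by those two numbers, an assumption for illustration rather than an official rate.

```python
# Figures as reported in the article (per one trillion training tokens).
BASELINE_YUAN = 6_350_000   # conventional high-performance hardware
OPTIMIZED_YUAN = 5_100_000  # lower-specification chips
BASELINE_USD = 880_000      # the article's rough dollar conversion

savings_yuan = BASELINE_YUAN - OPTIMIZED_YUAN
savings_pct = 100 * savings_yuan / BASELINE_YUAN

# Assumption: reuse the yuan-per-dollar rate implied by the article's own conversion.
implied_rate = BASELINE_YUAN / BASELINE_USD
optimized_usd = OPTIMIZED_YUAN / implied_rate

print(f"Savings: {savings_yuan:,} yuan ({savings_pct:.1f}%)")
print(f"Implied rate: {implied_rate:.2f} yuan per US dollar")
print(f"Optimized cost: about ${optimized_usd:,.0f}")
```

On these numbers the saving is 1.25 million yuan, or roughly 20% of the baseline cost per trillion tokens.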

Ant plans to apply the models produced this way, Ling-Plus and Ling-Lite, to industry use cases such as healthcare and finance. Earlier this year, the company acquired Haodf.com, a Chinese online medical platform, to further its ambition to deploy AI-based solutions in healthcare. It also operates other AI services, including a virtual assistant app called Zhixiaobao and a financial advisory platform known as Maxiaocai.

“If you find one point of attack to beat the world’s best kung fu master, you can still say you beat them, which is why real-world application is important,” said Robin Yu, chief technology officer of Beijing-based AI firm, Shengshang Tech.

Ant has made its models open source. Ling-Lite has 16.8 billion parameters – settings that help determine how a model functions – while Ling-Plus has 290 billion. For comparison, estimates suggest closed-source GPT-4.5 has around 1.8 trillion parameters, according to MIT Technology Review.

Despite progress, Ant’s paper noted that training models remains challenging. Small adjustments to hardware or model structure during model training sometimes resulted in unstable performance, including spikes in error rates.

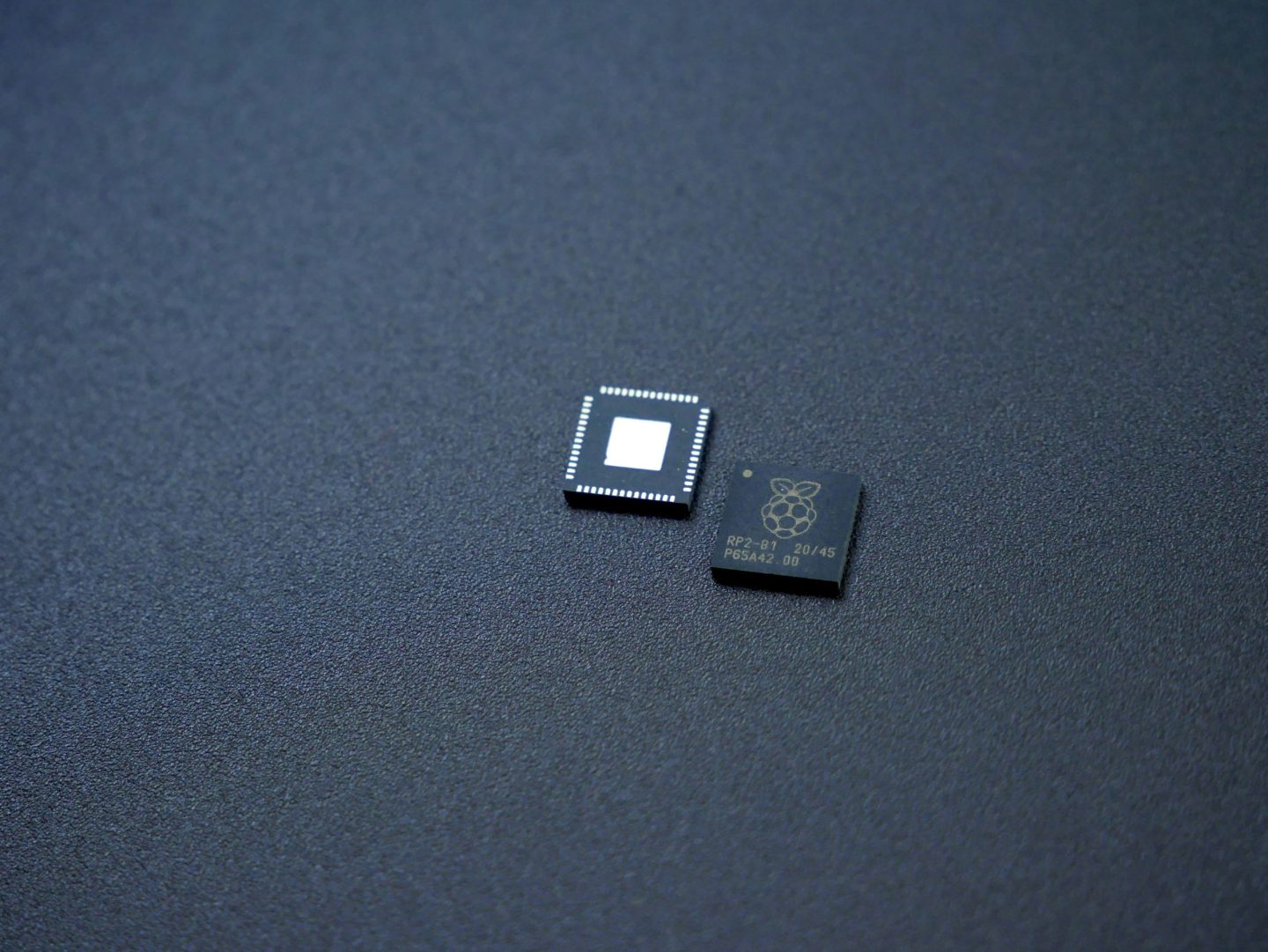

(Photo by Unsplash)
